Deploy Tic-Tac-Toe game CI/CD on GithubActions and Amazon EKS

Deploy Tic-Tac-Toe on DevsecOps Pipeline in GitHub Actions and AWS EKS

Table of contents

- Prerequisites

- A> Launch EC2 Instance

- B> Create IAM ROLE (For attaching to EC2 instance above)

- C> Attach the Role to the EC2 instance

- D> Install all the required tools on the EC2 Instance

- E> Login to Sonar Dashboard

- F> Integrate the Sonarqube with the GitHub Actions

- G> Create the secrets in Github Actions

- G> Run build script in Github Actions

- H> Creation of the Self-Hosted Runner and Adding to the EC2 instance

- I> Terraform Infra Modifications

- J> Focus on the "Build Job" of the build.yml

- K> "Deploy Job" of the build.yml

- L> Deploy to the EKS

- M> Setting Up Slack notifications

- N> Destroy Job in build.yml

- O> Remember to delete your EC2 instance

🚀 Just Released: CICD GithubActions for Tic-Tac-Toe Game! 🎮

I'm excited to share my latest project: a classic Tic-Tac-Toe game deployed through a full-fledged CI/CD pipeline on GitHub Actions. If you're a fan of nostalgic games and coding challenges, you'll love this!

🌟 Key Tools and Moments 🌟

- Implementing GitHub Actions Pipeline

- Adding the GitHub Actions Secrets

- Self-Hosted Runner (Creation of Self-Hosted Runner)

- Cascading Jobs in Github Actions

- EC2 Instance (Ubuntu 22.04, t2.medium, 20 GB storage)

- Terraform (to build EKS)

- AWS CLI and kubectl (to connect to and manage the EKS cluster)

- Sonarqube (Configured via Docker)

- Install npm (To get all tic-tac-toe dependencies installed)

- Trivy (Used for File Scanning and Trivy Image Scanning)

- Elastic Kubernetes Service (EKS, where we deploy our game)

- Slack Integration (to notify users about the status of the deployment)

Follow along with the blog to get the app running. Don't worry if some of these tools are new to you; every step is covered below.

Prerequisites

Clone the app from the below repository

A> Launch EC2 Instance

Details for the instance ==>

name ==> githubactions_eks

type ==> Ubuntu 22.04, t2.medium

other details ==> increase the storage to 20 GB

Mandatory Ports to keep open

3000 (for app to run)

9000 (for Sonarqube dashboard)

22, 443, 80

3**** (a NodePort in the 30000-32767 range, assigned randomly when the service is created on the EKS cluster)
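To tell the fixed ports apart from the randomly assigned one, here is a minimal sketch. It assumes the random port is a Kubernetes NodePort, whose default range is 30000-32767; `is_nodeport` is a hypothetical helper name, not part of any tool used in this blog.

```shell
# Hypothetical helper: succeeds when the argument is a port inside the
# default Kubernetes NodePort range (30000-32767).
is_nodeport() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;   # empty or not a plain decimal number
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Classify the ports mentioned above plus an example NodePort.
for p in 3000 9000 30080; do
  if is_nodeport "$p"; then
    echo "$p: inside the NodePort range"
  else
    echo "$p: fixed port, open it explicitly in the security group"
  fi
done
```

In practice you can open only the single assigned port once you know it, instead of the whole range.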

Create the Instance

B> Create IAM ROLE (For attaching to EC2 instance above)

IAM > Role > Create Role > Entity Type => AWS Service and Use Case => EC2

Then attach policies to it, such as

AWS EC2FullAccess

AWS S3FullAccess

AWS EKSFullAccess

AWS AdministratorAccess (added only for convenience in this demo; it already covers the policies above, so scope it down for anything beyond a demo)

Add a role name ==> Create that role ==> The role will be created

C> Attach the Role to the EC2 instance

EC2 Console > Select the githubactions_eks instance > In the top nav select "Actions" > Security > Modify IAM Role

Attach the newly created role to the instance

D> Install all the required tools on the EC2 Instance

Connect via SSH or connect via the EC2 Instance Connect

# Install Docker

sudo apt-get update

sudo apt install docker.io -y

sudo usermod -aG docker ubuntu

newgrp docker

sudo chmod 777 /var/run/docker.sock

# Run Sonarqube on Docker as container

docker run -d --name sonar -p 9000:9000 sonarqube:lts-community

# Install Java

#!/bin/bash

sudo apt update -y

sudo touch /etc/apt/keyrings/adoptium.asc

sudo wget -O /etc/apt/keyrings/adoptium.asc https://packages.adoptium.net/artifactory/api/gpg/key/public

echo "deb [signed-by=/etc/apt/keyrings/adoptium.asc] https://packages.adoptium.net/artifactory/deb $(awk -F= '/^VERSION_CODENAME/{print$2}' /etc/os-release) main" | sudo tee /etc/apt/sources.list.d/adoptium.list

sudo apt update -y

sudo apt install temurin-17-jdk -y

/usr/bin/java --version

# Install Trivy (For file and image scanning)

sudo apt-get install wget apt-transport-https gnupg lsb-release -y

wget -qO - https://aquasecurity.github.io/trivy-repo/deb/public.key | gpg --dearmor | sudo tee /usr/share/keyrings/trivy.gpg > /dev/null

echo "deb [signed-by=/usr/share/keyrings/trivy.gpg] https://aquasecurity.github.io/trivy-repo/deb $(lsb_release -sc) main" | sudo tee -a /etc/apt/sources.list.d/trivy.list

sudo apt-get update

sudo apt-get install trivy -y

# Install Terraform (To build our EKS Cluster)

sudo apt install wget -y

wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update && sudo apt install terraform -y

# Install kubectl (to manage the EKS cluster from the EC2 instance)

sudo apt update

sudo apt install curl -y

curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

kubectl version --client

# Install AWS CLI (CLI to access EKS)

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

sudo apt-get install unzip -y

unzip awscliv2.zip

sudo ./aws/install

# Install Node.js 16 and npm (For project dependencies installation)

curl -fsSL https://deb.nodesource.com/gpgkey/nodesource.gpg.key | sudo gpg --dearmor -o /usr/share/keyrings/nodesource-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/nodesource-archive-keyring.gpg] https://deb.nodesource.com/node_16.x focal main" | sudo tee /etc/apt/sources.list.d/nodesource.list

sudo apt update

sudo apt install -y nodejs

Verify all the tools are installed properly by using

docker --version

trivy --version

terraform --version

aws --version

kubectl version --client

node -v

java --version
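Instead of running each version command by hand, the check can be sketched as a small loop. `missing_tools` is a hypothetical helper; it only tests whether each name resolves on PATH and does not validate versions.

```shell
# Hypothetical helper: print every name from the argument list that is
# not found on PATH (one per line); prints nothing when all are present.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

missing="$(missing_tools docker trivy terraform aws kubectl node java)"
if [ -n "$missing" ]; then
  # Unquoted on purpose so the names are joined on one line.
  echo "Not installed yet:" $missing
else
  echo "All tools found"
fi
```

If the loop reports a tool, rerun the matching install block above before moving on.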

E> Login to Sonar Dashboard

Open http://<ec2-public-ip>:9000 in the browser

Login to the sonar dashboard with credentials

username ==> admin

password ==> admin

Create a new password and get logged in

F> Integrate the Sonarqube with the GitHub Actions

Click the "Manually" tile

Set the project display name to ==> Tic-tac-toe-game

On the next page select ==> GitHub Actions

It will show a set of steps to be followed

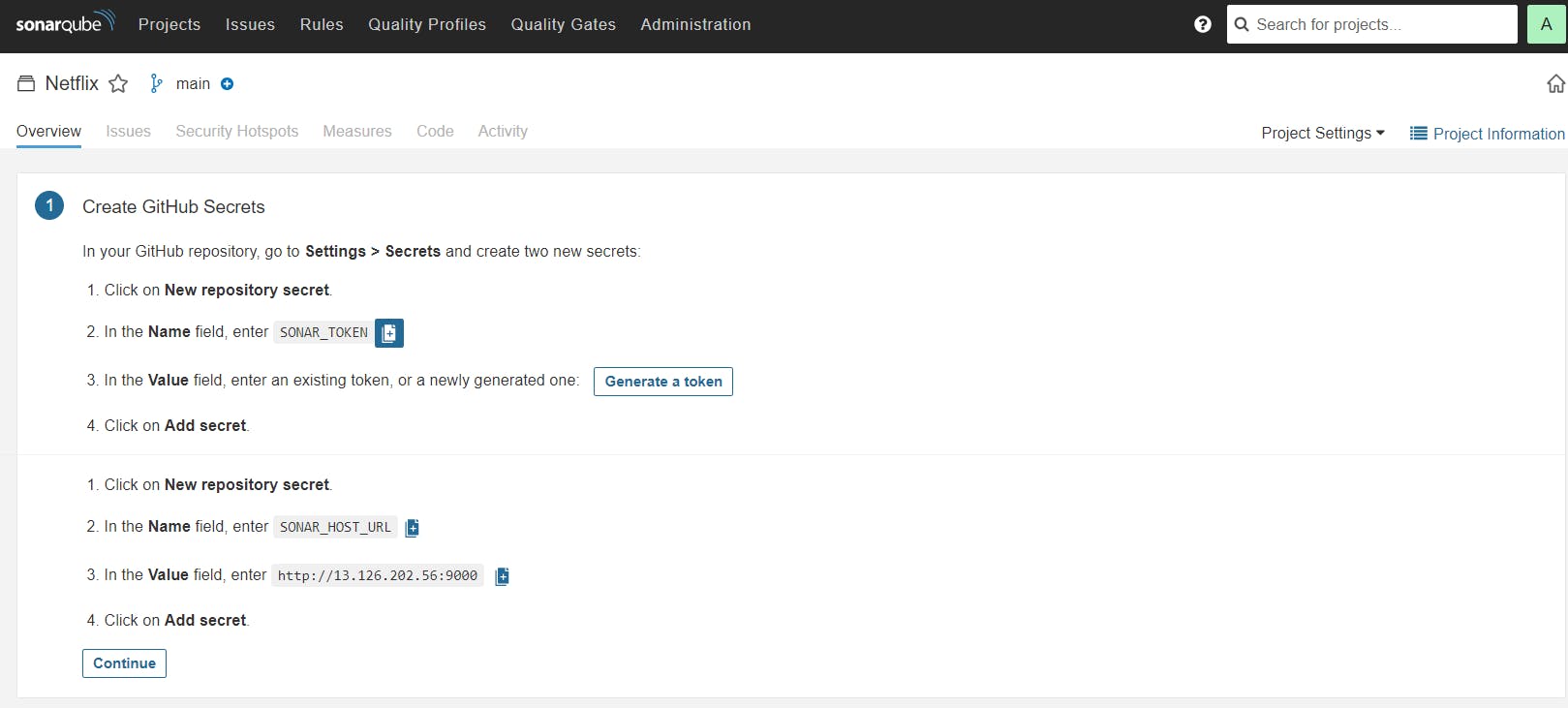

G> Create the secrets in Github Actions

Go to the repository > "Settings" in the top navigation > "Secrets and variables" in the side navigation > Actions

On the page that opens, click "New repository secret"

Add the token generated by Sonar as SONAR_TOKEN and the Sonar URL as SONAR_HOST_URL (as in the above image)

Remember: once a secret is saved, its value can't be viewed again (it can only be overwritten), so keep a copy if it is required elsewhere.

Once added, both secrets are listed on this page.

Get back to the Sonar dashboard and move to the 2nd step

Our tic-tac-toe game is a React project, so select "Other" as the project type here

Sonar then shows the project properties that need to be added to the GitHub repository

Create a new file named sonar-project.properties in the repository with the following content:

# Content of sonar-project.properties

sonar.projectKey=<Your--key--shown>

In the 3rd step, add a file named "build.yml" under the ".github/workflows" folder

Copy the build.yml content shown by Sonar into it and commit the file.

Committing the workflow file triggers the GitHub Action

G> Run build script in Github Actions

Go to the repository ==> select the "Actions" tab in the top nav ==> the build.yml workflow is running with the build steps we added to the file

Click on the build to see the steps involved

A successful run finishes with a green tick

The Sonar dashboard now shows the analysis report for the game

H> Creation of the Self-Hosted Runner and Adding to the EC2 instance

Runners are the machines (Ubuntu, Windows, or macOS) on which the workflow jobs run;

There are two types: "GitHub-hosted" and "self-hosted" runners

GitHub-hosted runners come with a default environment and a set of tools, such as Docker, already installed.

Self-hosted runners give more granular control, but no tools are included by default; tools such as Docker have to be installed manually.

Go to the repository ==> Settings (top navigation) ==> Actions (side navigation) ==> Runners ==> New self-hosted runner

Our EC2 instance runs Ubuntu, so we use the commands for Linux

Copy the full set of commands from there <the commands below are just for reference; the version, hash, and token will differ for your account>

# Make the directory for actions-runner

mkdir actions-runner && cd actions-runner

# Download the GitHub Actions runner from the URL

curl -o actions-runner-linux-x64-2.311.0.tar.gz -L https://github.com/actions/runner/releases/download/v2.311.0/actions-runner-linux-x64-2.311.0.tar.gz

# Verify the download against its SHA-256 checksum

echo "29fc8cf2dab4c195bb147384e7e2c94cfd4d4022c793b346a6175435265aa278  actions-runner-linux-x64-2.311.0.tar.gz" | shasum -a 256 -c

# Extract the installer files needed to run the runner

tar xzf ./actions-runner-linux-x64-2.311.0.tar.gz

# Configure the runner (use the short-lived token shown on your own "New self-hosted runner" page)

./config.sh --url https://github.com/adityadhopade/tic-tac-toe-game --token <YOUR-RUNNER-TOKEN>

# Start the runner; run this again whenever the runner needs to be started

./run.sh

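While configuring the runner, config.sh asks a few questions (runner group, runner name, labels, work folder); set the label you plan to use in "runs-on" (I used eks-github-actions) and accept the defaults for the rest.

After the final command, the runner connects to GitHub and waits for jobs.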
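The checksum step in the commands above is worth understanding, because it is what stops a corrupted or tampered download from being extracted. Below is a minimal sketch of the same idea. It assumes `sha256sum` from coreutils is available (the runner instructions use `shasum -a 256 -c`, which does the same job), and `verify_sha256` is a hypothetical helper name.

```shell
# Hypothetical helper: verify_sha256 <expected-hex-digest> <file>
# Succeeds only when the file's SHA-256 digest equals the expected value.
verify_sha256() {
  actual="$(sha256sum "$2" | awk '{print $1}')"
  [ "$actual" = "$1" ]
}

# Demo on a throwaway file, so the sketch runs without downloading anything.
tmp="$(mktemp)"
printf 'hello\n' > "$tmp"
good="$(sha256sum "$tmp" | awk '{print $1}')"

verify_sha256 "$good" "$tmp" && echo "checksum OK, safe to extract"
verify_sha256 "0000" "$tmp" || echo "checksum mismatch, do not extract"
rm -f "$tmp"
```

For the real runner archive, pass the hash shown on your "New self-hosted runner" page as the first argument.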

I> Terraform Infra Modifications

Clone the repository locally and move into the Terraform folder

cd TIC-TAC-TOE/Eks-terraform

Changes in "provider.tf" ==> set your region; mine was ap-south-1

Changes in "backend.tf" ==> add a unique bucket name

Create a folder named "EKS" in that bucket; it stores the tfstate file, which is the single source of truth for Terraform

In "main.tf" ==> we define the infra needed to create the EKS cluster

It contains the following components

AWS IAM role

AWS IAM Policy Attachment

AWS VPC

AWS SUBNETS

EKS CLUSTER

EKS NODE GROUP

EKS NODE GROUP'S Role Attachment and IAM ROLE and various policies needed by it

Now run the commands in the current folder

terraform init

terraform fmt

terraform validate

terraform plan

terraform apply --auto-approve

NOTE: Infra will take around 15-20 minutes to create

After creation, check the EKS Console ==> Clusters (in the region provided above)

Also check the EC2 Console ==> the instance created for the EKS node group

J> Focus on the "Build Job" of the build.yml

The build is triggered on each push to the main branch; we could instead use a manual workflow_dispatch trigger, so that the build runs only when we start it explicitly.

As we now want to use our self-hosted runner, replace the "runs-on" value with the self-hosted runner's name or label. I have used the label "eks-github-actions"

runs-on: [eks-github-actions]

The next step is to install the dependencies of the tic-tac-toe game; as it is a React project, we use npm to install them

    - name: Dependencies install using npm
      run: |
        npm install

Next, we scan the game files for vulnerabilities using Trivy and save a report. The file is saved on the runner under actions-runner > _work > TIC-TAC-TOE > TIC-TAC-TOE

    # Scans the whole working directory; the reported vulnerabilities
    # (Low, Medium, High) are all stored in trivy_suggestions.txt
    - name: Scan file using Trivy
      run: trivy fs . > trivy_suggestions.txt

We build our image using Docker; but first we need to create a DockerHub Personal Access Token (PAT) and add it as a secret in GitHub Actions

Then add the username and the generated token as GitHub Actions secrets (DOCKERHUB_USERNAME and DOCKERHUB_TOKEN), as we did above

    - name: Docker Build image
      run: |
        # Build the image from the current Dockerfile, tagging it "tic-tac-toe-game"
        docker build -t tic-tac-toe-game .
        # Retag it with the new name <DockerHub-Username>/tic-tac-toe-game:latest
        docker tag tic-tac-toe-game adityadho/tic-tac-toe-game:latest
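The retag command follows the pattern `<DockerHub-Username>/<image-name>:<tag>`. As a minimal sketch, the reference can be composed from its parts; `image_ref` is a hypothetical helper, and tagging with the commit SHA is only an optional variation on the `latest` tag used in this blog. `GITHUB_SHA` is the variable GitHub Actions sets on every run.

```shell
# Hypothetical helper: image_ref <dockerhub-user> <image-name> [tag]
# Prints the full image reference; the tag defaults to "latest".
image_ref() {
  printf '%s/%s:%s\n' "$1" "$2" "${3:-latest}"
}

# Inside a workflow GITHUB_SHA is set; locally we fall back to "latest".
tag="${GITHUB_SHA:-latest}"

# Only prints the command, so the sketch runs without Docker installed.
echo "docker tag tic-tac-toe-game $(image_ref adityadho tic-tac-toe-game "$tag")"
```

Replace `adityadho` with your own DockerHub username, as in the step above.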

Log in with the DockerHub credentials; the PAT goes into the password field

    - name: Login to DockerHub
      uses: docker/login-action@v3
      with:
        username: ${{ secrets.DOCKERHUB_USERNAME }}
        password: ${{ secrets.DOCKERHUB_TOKEN }}

Push the image to DockerHub

    - name: Push Image to DockerHub
      run: docker push adityadho/tic-tac-toe-game:latest

Put together, it looks like this

    - name: Docker Build image
      run: |
        docker build -t tic-tac-toe-game .
        docker tag tic-tac-toe-game adityadho/tic-tac-toe-game:latest
      env:
        DOCKER_CLI_ACI: 1
    - name: Login to DockerHub
      uses: docker/login-action@v3
      with:
        username: ${{ secrets.DOCKERHUB_USERNAME }}
        password: ${{ secrets.DOCKERHUB_TOKEN }}
    - name: Push Image to DockerHub
      run: docker push adityadho/tic-tac-toe-game:latest

After the image is built and pushed, we scan it as well; the image scan reports vulnerabilities in the packages and dependencies inside the image, which the file scan alone does not cover.

    - name: Scanning image using Trivy
      run: trivy image adityadho/tic-tac-toe-game:latest > trivy_image_scan_report.txt

This is what the overall "BUILD JOB" implementation looks like

    name: Build
    on:
      push:
        branches:
          - main
    jobs:
      build:
        name: Build
        runs-on: [eks-github-actions]
        steps:
          - name: Checkout code
            uses: actions/checkout@v2
            with:
              fetch-depth: 0 # Shallow clones should be disabled for a better relevancy of analysis
          - uses: sonarsource/sonarqube-scan-action@master
            env:
              SONAR_TOKEN: ${{ secrets.SONAR_TOKEN }}
              SONAR_HOST_URL: ${{ secrets.SONAR_HOST_URL }}
          - name: Dependencies install using npm
            run: |
              npm install
          - name: Scan file using Trivy
            run: trivy fs . > trivy_suggestions.txt
          - name: Docker Build image
            run: |
              docker build -t tic-tac-toe-game .
              docker tag tic-tac-toe-game adityadho/tic-tac-toe-game:latest
            env:
              DOCKER_CLI_ACI: 1
          - name: Login to DockerHub
            uses: docker/login-action@v3
            with:
              username: ${{ secrets.DOCKERHUB_USERNAME }}
              password: ${{ secrets.DOCKERHUB_TOKEN }}
          - name: Push Image to DockerHub
            run: docker push adityadho/tic-tac-toe-game:latest
          - name: Scanning image using Trivy
            run: trivy image adityadho/tic-tac-toe-game:latest > trivy_image_scan_report.txt

K> "Deploy Job" of the build.yml

The Deploy job should start only after the first job has run successfully, so we create a dependency between them using "needs" and give it the name of the job above, i.e. build

    needs: build

or

    needs: [build]

timeout-minutes sets the maximum time (in minutes) the job may run before it is cancelled

    timeout-minutes: 10

It also runs on our self-hosted runner "eks-github-actions"

    runs-on: [eks-github-actions]

Pull the Docker image from DockerHub

    - name: Pull docker images
      run: docker pull adityadho/tic-tac-toe-game:latest

Scan the pulled image with the Trivy image scanner

    - name: Scan image using Trivy
      run: trivy image adityadho/tic-tac-toe-game:latest > trivy_pull_image_scan.txt

Now we run the container on the EC2 instance to check that it works properly.

    - name: Deploy to container
      run: |
        docker run -d --name game -p 3000:3000 adityadho/tic-tac-toe-game:latest

The first run goes smoothly, but on the next run Docker refuses to start a second container with the name "game"; so we add a guard that first checks whether the container already exists

    - name: Check if container exists
      id: check_container
      run: |
        if docker ps -a | grep game; then
          echo "Container exists"
          # Write the step output to $GITHUB_OUTPUT (the ::set-output command is deprecated)
          echo "container_exists=true" >> "$GITHUB_OUTPUT"
        else
          echo "Container does not exist"
          echo "container_exists=false" >> "$GITHUB_OUTPUT"
        fi
      shell: bash
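One caveat with the guard: `grep game` matches any line of the `docker ps -a` output that contains "game", including a container created from the tic-tac-toe-game image under a different name. A minimal sketch of an exact-name check follows. `name_in_list` is a hypothetical helper, and the list of names is simulated so the sketch runs without Docker; on the runner it would come from `docker ps -a --format '{{.Names}}'`.

```shell
# Hypothetical helper: succeeds when one line on stdin equals the name exactly.
# -F: fixed string, -x: match the whole line, -q: print nothing.
name_in_list() {
  grep -Fxq -- "$1"
}

# Simulated output of: docker ps -a --format '{{.Names}}'
names="$(printf '%s\n' tic-tac-toe-game sonar)"

if printf '%s\n' "$names" | name_in_list game; then
  echo "container 'game' exists"
else
  echo "container 'game' does not exist"
fi
```

Here a substring match would report that the container exists, while the exact match correctly reports that no container is named "game".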

REMEMBER: Keep the "Remove Container" step only when you want the existing container deleted (for example on a redeploy, or when tearing the whole setup down after implementing the destroy job); otherwise comment it out for now

    - name: Remove Container
      if: steps.check_container.outputs.container_exists == 'true'
      run: |
        docker stop game
        docker rm game
      shell: bash

For now, it will look like this

    - name: Check if container exists
      id: check_container
      run: |
        if docker ps -a | grep game; then
          echo "Container exists"
          echo "container_exists=true" >> "$GITHUB_OUTPUT"
        else
          echo "Container does not exist"
          echo "container_exists=false" >> "$GITHUB_OUTPUT"
        fi
      shell: bash
    - name: Remove Container
      if: steps.check_container.outputs.container_exists == 'true'
      run: |
        docker stop game
        docker rm game
      shell: bash
    - name: Deploy to container
      run: |
        docker run -d --name game -p 3000:3000 adityadho/tic-tac-toe-game:latest

We can verify that the application is running at

http://<ec2-public-ip>:3000

But our goal is to deploy it to EKS

L> Deploy to the EKS

For this, we first need to update the local kubeconfig file, which lives under the ".kube" folder (not manually, of course)

Use the command below

    - name: Update kubeconfig
      run: aws eks --region <cluster-region> update-kubeconfig --name <cluster-name>

With my region and cluster name, it looks like this

    - name: Update Kubeconfig
      run: aws eks --region ap-south-1 update-kubeconfig --name EKS_CLOUD

Next we deploy to the AWS EKS cluster; for that we have created a file named deployment-service.yml locally

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: tic-tac-toe
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: tic-tac-toe
      template:
        metadata:
          labels:
            app: tic-tac-toe
        spec:
          containers:
            - name: tic-tac-toe
              image: adityadho/tic-tac-toe-game:latest # your image name here
              ports:
                - containerPort: 3000 # Use port 3000
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: tic-tac-toe-service
    spec:
      selector:
        app: tic-tac-toe
      ports:
        - protocol: TCP
          port: 80 # Expose port 80
          targetPort: 3000
      type: LoadBalancer

To deploy, use the step

    - name: Deploy to EKS
      run: kubectl apply -f deployment-service.yml

If you want every run to replace the Deployment and Service created by the manifest above, replace that step with the following one

It checks whether the Deployment and the Service already exist and, if so, deletes them before applying the manifest again

    - name: Deploy to K8's
      run: |
        # Delete the existing deployment if it exists
        if kubectl get deployment tic-tac-toe &>/dev/null; then
          kubectl delete deployment tic-tac-toe
          echo "Existing deployment removed"
        fi
        # Delete the existing service if it exists
        if kubectl get service tic-tac-toe-service &>/dev/null; then
          kubectl delete service tic-tac-toe-service
          echo "Existing service removed"
        fi
        kubectl apply -f deployment-service.yml

M> Setting Up Slack notifications

[MUST] Follow this link for basic implementation

Basic Slack Channel Implementation:

Then click on your name > Settings & administration > Manage apps

Click "Build" in the top navigation

Click "Create an app"

From scratch > add the app name and select the workspace that contains the channel you recently created

Select Incoming Webhooks, as we want to integrate them with GitHub Actions and add the webhook URL as a secret in GitHub Actions

Toggle to activate Incoming Webhooks

Click "Add New Webhook to Workspace"

Select the channel you created for this purpose

Copy the webhook URL and add it as the secret SLACK_WEBHOOK_URL in GitHub Actions

The code below is the workflow step that sends the Slack notification

We want the Slack notification to be sent always (regardless of success or failure), so we use

    if: always()

It uses the act10ns/slack action, and we pass it the live job status (failure or success)

Change the channel name to your own channel

The SLACK_WEBHOOK_URL secret is passed in as an environment variable

    - name: Send a Slack Notification
      if: always()
      uses: act10ns/slack@v1
      with:
        status: ${{ job.status }}
        steps: ${{ toJson(steps) }}
        channel: '#your-channel-name'
      env:
        SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL }}
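To see what an incoming webhook expects, or to test the webhook URL before wiring it into the workflow, here is a minimal sketch that posts a plain-text message with curl. `slack_payload` is a hypothetical helper, and it assumes its arguments contain no double quotes or backslashes, since it does no JSON escaping. The act10ns/slack action builds a richer message than this.

```shell
# Hypothetical helper: slack_payload <job-name> <status>
# Prints a minimal incoming-webhook payload.
# Assumption: the arguments contain no double quotes or backslashes.
slack_payload() {
  printf '{"text":"Job %s finished with status: %s"}\n' "$1" "$2"
}

payload="$(slack_payload deploy success)"
echo "$payload"

# Send only when a webhook URL is exported in the environment; the
# variable name matches the secret used in the workflow step.
if [ -n "${SLACK_WEBHOOK_URL:-}" ]; then
  curl -sS -X POST -H 'Content-Type: application/json' \
    --data "$payload" "$SLACK_WEBHOOK_URL"
fi
```

Without the variable set, the sketch only prints the payload, so it is safe to run anywhere.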

The final flow of the steps in the Deploy Job looks as follows

    deploy:
      needs: build
      timeout-minutes: 10
      runs-on: [eks-github-actions]
      steps:
        - name: Pull docker images
          run: docker pull adityadho/tic-tac-toe-game:latest
        - name: Scan image using Trivy
          run: trivy image adityadho/tic-tac-toe-game:latest > trivy_pull_image_scan.txt
        - name: Check if container exists
          id: check_container
          run: |
            if docker ps -a | grep game; then
              echo "Container exists"
              echo "container_exists=true" >> "$GITHUB_OUTPUT"
            else
              echo "Container does not exist"
              echo "container_exists=false" >> "$GITHUB_OUTPUT"
            fi
          shell: bash
        - name: Remove Container
          if: steps.check_container.outputs.container_exists == 'true'
          run: |
            docker stop game
            docker rm game
          shell: bash
        - name: Deploy to container
          run: |
            docker run -d --name game -p 3000:3000 adityadho/tic-tac-toe-game:latest
        - name: Update Kubeconfig
          run: aws eks --region ap-south-1 update-kubeconfig --name EKS_CLOUD
        - name: Deploy to K8's
          run: |
            # Delete the existing deployment if it exists
            if kubectl get deployment tic-tac-toe &>/dev/null; then
              kubectl delete deployment tic-tac-toe
              echo "Existing deployment removed"
            fi
            # Delete the existing service if it exists
            if kubectl get service tic-tac-toe-service &>/dev/null; then
              kubectl delete service tic-tac-toe-service
              echo "Existing service removed"
            fi
            kubectl apply -f deployment-service.yml
            current_directory=$(pwd)
            echo "Current directory is: $current_directory"
        - name: Slack Notification
          if: always()
          uses: act10ns/slack@v1
          with:
            status: ${{ job.status }}
            steps: ${{ toJson(steps) }}
            channel: '#github-actions-eks'
          env:
            SLACK_WEBHOOK_URL: ${{ secrets.SLACK_WEBHOOK_URL }}

N> Destroy Job in build.yml

We create this job to destroy the Terraform resources that were created from the EC2 instance: the AWS EKS cluster, its node group, and the policies created for that infra

Rather than going to the folder manually and destroying it, we add it as a job so that we can trigger it as per our needs.

The destroy job needs both build and deploy to run first (only what has been created can be deleted). Note that, as written, it starts automatically once build and deploy succeed, so keep the job commented out until you actually want to tear the cluster down

In the first step, we print the current directory to see which folder the job runs in

In the second step, we move to the path where our Terraform files lie by setting the working directory as

    working-directory: /home/ubuntu/tic-tac-toe-game/Eks-terraform

We then destroy our Terraform build with the command

    terraform destroy --auto-approve

The Destroy job looks as follows

    destroy:
      needs: [build, deploy]
      runs-on: [eks-github-actions]
      steps:
        - name: Get current directory
          run: |
            current_directory=$(pwd)
            echo "Current directory is: $current_directory"
        - name: Destroy Terraform build
          working-directory: /home/ubuntu/tic-tac-toe-game/Eks-terraform
          run: |
            terraform destroy --auto-approve

This sums up all 3 jobs and how they work in the CI/CD pipeline using GitHub Actions

O> Remember to delete your EC2 instance

We have destroyed our Terraform build, but the EC2 instance itself is yet to be terminated

There are a few more resources that need to be deleted

The role we attached to the EC2 instance (it helped us in creating the EKS cluster and accessing S3)

The S3 bucket created for the backend (the Terraform state file inside its EKS folder is deleted along with it)

I hope you find the content valuable, and I would encourage you to try out the demo and get your hands dirty. Preparing this CI/CD demo and making it work took a lot of time and effort, and I am sure it will work for you too. If you get stuck, ChatGPT or a Google search will surely help you out.
